- Description:

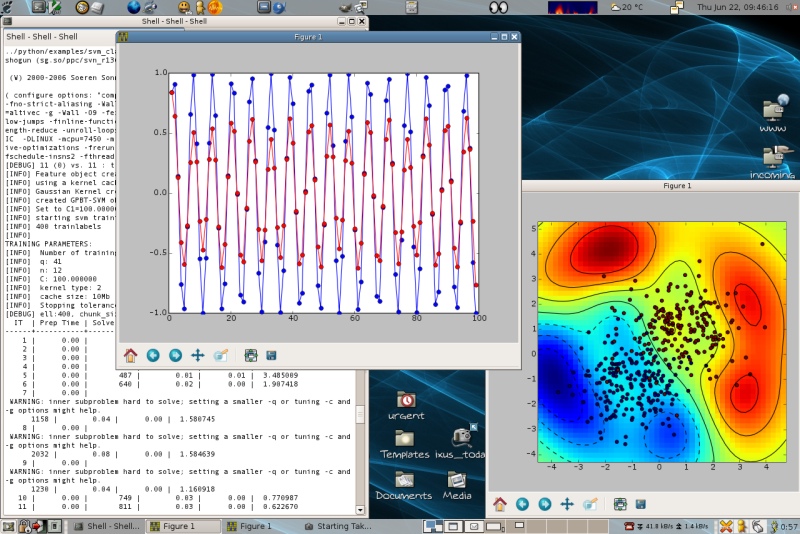

The SHOGUN machine learning toolbox's focus is on large scale kernel methods and especially on Support Vector Machines (SVM). It comes with a generic interface for SVMs, features several SVM and kernel implementations, includes LinAdd optimizations and also Multiple Kernel Learning algorithms. SHOGUN also implements a number of linear methods. It allows the input feature-objects to be dense, sparse or strings and of type int/short/double/char.

The toolbox not only provides efficient implementations of the most common kernels, like the

- Linear,

- Polynomial,

- Gaussian and

- Sigmoid Kernel

but also comes with a number of recent string kernels, such as the

- Locality Improved,

- Fisher,

- TOP,

- Spectrum,

- Weighted Degree Kernel (with shifts).

For the latter, the efficient LINADD optimizations are implemented. SHOGUN also offers the freedom of working with custom pre-computed kernels. One of its key features is the combined kernel, which is constructed as a weighted linear combination of a number of sub-kernels, each of which may work on a different domain. An optimal sub-kernel weighting can be learned using Multiple Kernel Learning. Currently, SVM 2-class classification and regression problems can be dealt with. However, SHOGUN also implements a number of linear methods like

- Linear Discriminant Analysis (LDA),

- Linear Programming Machine (LPM) and

- (Kernel) Perceptrons

and features algorithms to train hidden Markov models.
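The combined kernel described above can be illustrated with a minimal sketch in plain Python. This is not SHOGUN's API: the function names, the Gaussian parametrization `exp(-||x - y||^2 / width)`, the toy data and the fixed weights are all assumptions made for illustration. In practice the weights would be learned by Multiple Kernel Learning rather than set by hand.

```python
import math
from collections import Counter


def gaussian_kernel(x, y, width=1.0):
    """Gaussian kernel on dense real vectors (assumed parametrization)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / width)


def spectrum_kernel(s, t, k=2):
    """Spectrum kernel on strings: dot product of k-mer count vectors."""
    counts_s = Counter(s[i:i + k] for i in range(len(s) - k + 1))
    counts_t = Counter(t[i:i + k] for i in range(len(t) - k + 1))
    return sum(c * counts_t[kmer] for kmer, c in counts_s.items())


def combined_kernel(example_a, example_b, sub_kernels, weights):
    """Weighted sum of sub-kernels.

    The i-th sub-kernel only sees the i-th part of each example, so the
    parts may live in different domains (here: real vectors and strings).
    """
    return sum(
        w * kern(part_a, part_b)
        for w, kern, part_a, part_b in zip(weights, sub_kernels, example_a, example_b)
    )


# Toy examples, each with a dense part and a string part (made-up data).
a = ([0.0, 1.0], "ACGT")
b = ([0.0, 1.0], "ACGA")

# Hand-picked weights; an MKL solver would learn these instead.
value = combined_kernel(a, b, [gaussian_kernel, spectrum_kernel], [0.5, 2.0])
print(value)
```

The design point is that a non-negative weighted sum of valid kernels is again a valid kernel, so the result can be passed to any kernel machine unchanged.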

The input feature-objects can be

- dense

- sparse or

- strings

and of type int/short/double/char, and can be converted into different feature types. Chains of preprocessors (e.g. subtracting the mean) can be attached to each feature object, allowing for on-the-fly pre-processing.
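As a rough sketch of what a preprocessor chain attached to a feature object might look like, consider the following plain-Python example. The class and method names (`DenseFeatures`, `SubtractMean`, `ScaleToUnitNorm`, `add_preprocessor`, `get_vector`) are hypothetical and are not taken from SHOGUN; the point is only that the raw data stays untouched and the chain is applied each time a vector is requested.

```python
import math


class SubtractMean:
    """Hypothetical preprocessor: learns per-dimension means, then removes them."""

    def fit(self, vectors):
        n = len(vectors)
        self.mean = [sum(column) / n for column in zip(*vectors)]

    def apply(self, vector):
        return [v - m for v, m in zip(vector, self.mean)]


class ScaleToUnitNorm:
    """Hypothetical preprocessor: rescales each vector to Euclidean norm 1."""

    def fit(self, vectors):
        pass  # stateless, nothing to learn

    def apply(self, vector):
        norm = math.sqrt(sum(v * v for v in vector))
        return [v / norm for v in vector] if norm > 0 else list(vector)


class DenseFeatures:
    """Hypothetical feature object holding raw vectors and a preprocessor chain."""

    def __init__(self, vectors):
        self.vectors = vectors
        self.preprocessors = []

    def add_preprocessor(self, preprocessor):
        # Fit the new preprocessor on the output of the chain so far.
        preprocessor.fit([self.get_vector(i) for i in range(len(self.vectors))])
        self.preprocessors.append(preprocessor)

    def get_vector(self, i):
        # The chain is applied on the fly; the stored raw data is never modified.
        vector = self.vectors[i]
        for preprocessor in self.preprocessors:
            vector = preprocessor.apply(vector)
        return vector


feats = DenseFeatures([[1.0, 2.0], [3.0, 6.0]])
feats.add_preprocessor(SubtractMean())
feats.add_preprocessor(ScaleToUnitNorm())
print(feats.get_vector(0))
```

Applying the chain lazily trades some repeated computation for memory, since no preprocessed copy of the data has to be stored.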

SHOGUN is implemented in C++ and interfaces to Matlab(tm), R, Octave and Python.

- Changes to previous version:

This release contains major feature enhancements and bugfixes:

- Implement DotFeatures and CombinedDotFeatures. DotFeatures need to provide dot-product and similar operations (hence the name). This enables training of linear methods with mixed data types (sparse, dense and others, including the newly implemented string-based SpecFeatures and WDFeatures).

- MKL no longer requires CPLEX.

- Add q-norm MKL support based on internal Newton implementation.

- Add 1-norm MKL support based on GLPK.

- Add multiclass MKL support based on GLPK and the GMNP SVM solver.

- Implement Tensor Product Pair Kernel (TPPK).

- Support compilation on the iPhone :)

- Add an option to set wds kernel position weights.

- Build static libshogun.a for libshogun target.

- The test suite can also test the modular R interface; add a test for OligoKernel.

- Ocas and WDOcas can be used with a bias feature now.

- Update to LibSVM 2.88.

- Enable parallelized HMM code by default.
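The DotFeatures idea from the first item in this list can be sketched as follows. This is an illustrative plain-Python model, not SHOGUN code: the class names, the method names `dense_dot` and `add_to_dense`, and the toy data are assumptions. It shows why a linear learner that only uses "dot product with a dense weight vector" and "add a scaled example to the weight vector" can train on a concatenation of dense and sparse feature blocks without knowing their storage format.

```python
class DenseDot:
    """Hypothetical dense feature block: rows are lists of floats."""

    def __init__(self, rows):
        self.rows = rows

    def dim(self):
        return len(self.rows[0])

    def dense_dot(self, i, w, offset=0):
        return sum(x * w[offset + j] for j, x in enumerate(self.rows[i]))

    def add_to_dense(self, alpha, i, w, offset=0):
        for j, x in enumerate(self.rows[i]):
            w[offset + j] += alpha * x


class SparseDot:
    """Hypothetical sparse feature block: rows are {index: value} dicts."""

    def __init__(self, rows, dim):
        self.rows = rows
        self._dim = dim

    def dim(self):
        return self._dim

    def dense_dot(self, i, w, offset=0):
        return sum(x * w[offset + j] for j, x in self.rows[i].items())

    def add_to_dense(self, alpha, i, w, offset=0):
        for j, x in self.rows[i].items():
            w[offset + j] += alpha * x


class CombinedDot:
    """Concatenates blocks; each block owns a slice of the weight vector."""

    def __init__(self, parts):
        self.parts = parts

    def dim(self):
        return sum(part.dim() for part in self.parts)

    def dense_dot(self, i, w, offset=0):
        total = 0.0
        for part in self.parts:
            total += part.dense_dot(i, w, offset)
            offset += part.dim()
        return total

    def add_to_dense(self, alpha, i, w, offset=0):
        for part in self.parts:
            part.add_to_dense(alpha, i, w, offset)
            offset += part.dim()


def train_perceptron(features, labels, epochs=10, learning_rate=1.0):
    """Plain perceptron that touches the data only through the two dot operations."""
    w = [0.0] * features.dim()
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for i, y in enumerate(labels):
            if y * (features.dense_dot(i, w) + bias) <= 0:
                features.add_to_dense(learning_rate * y, i, w)
                bias += learning_rate * y
                mistakes += 1
        if mistakes == 0:
            break
    return w, bias


# Made-up toy data: one dense dimension plus three sparse dimensions.
dense = DenseDot([[1.0], [2.0], [-1.0], [-2.0]])
sparse = SparseDot([{0: 1.0}, {2: 1.0}, {1: 1.0}, {}], dim=3)
combined = CombinedDot([dense, sparse])
labels = [1, 1, -1, -1]

w, bias = train_perceptron(combined, labels)
print(w, bias)
```

String-based feature types would fit the same pattern by implementing the two operations over an implicit k-mer feature space instead of stored vectors.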

- Supported Operating Systems: Cygwin, Linux, Macosx

- Data Formats: Plain Ascii, Svmlight

- Tags: Bioinformatics, Large Scale, String Kernel, Kernel, Kernelmachine, Lda, Lpm, Matlab, Mkl, Octave, Python, R, Svm

Comments

- Soeren Sonnenburg (on September 12, 2008, 16:14:36)

- In case you find bugs, feel free to report them at [http://trac.tuebingen.mpg.de/shogun](http://trac.tuebingen.mpg.de/shogun).

- Tom Fawcett (on January 3, 2011, 03:20:48)

- You say, "Some of them come with no less than 10 million training examples, others with 7 billion test examples." I'm not sure what this means. I have problems with mixed symbolic/numeric attributes and the training example sets don't fit in memory. Does SHOGUN require that training examples fit in memory?

- Soeren Sonnenburg (on January 14, 2011, 18:12:01)

- Shogun does not necessarily require examples to be in memory (if you use any of the FileFeatures). However, most algorithms within shogun are batch type - so using the non in-memory FileFeatures would probably be very slow. This does not matter for doing predictions of course, even though the 7 billion test examples above referred to predicting gene starts on the whole human genome (in memory ~3.5GB and a context window of 1200nt was shifted around in that string). In addition one can compute features (or feature space) on-the-fly potentially saving lots of memory. Not sure how big your problem is but I guess this is better discussed on the shogun mailinglist.

- Yuri Hoffmann (on September 14, 2013, 17:12:16)

- Cannot use the Java interface in Cygwin (already reported on GitHub) or in Debian.


Project details for SHOGUN