- Description:

Overview

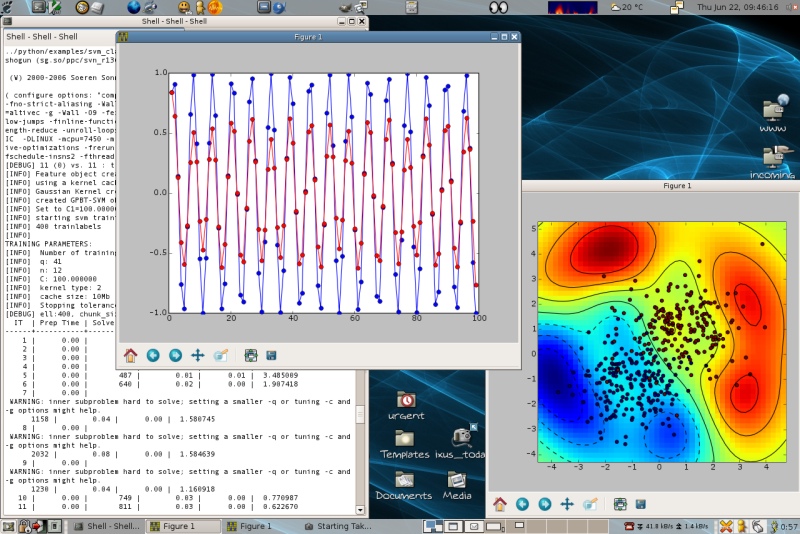

The SHOGUN machine learning toolbox focuses on large-scale kernel methods, especially Support Vector Machines (SVMs). It comes with a generic interface for kernel machines and features 15 different SVM implementations, all of which access features in a unified way: either via a general kernel framework or, in the case of linear SVMs, via so-called "DotFeatures", i.e., features providing a minimal set of operations (such as the dot product).
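To illustrate the "DotFeatures" idea, here is a minimal sketch in plain Python. It is not SHOGUN code: the class `SparseFeatures`, the method names `dense_dot` and `add_to_dense_vec`, and the function `train_perceptron` are assumptions made for this example, and a perceptron stands in for a real linear SVM solver. The point is only that a linear learner can be written against two operations, so the storage format behind them can vary freely.

```python
class SparseFeatures:
    """Hypothetical feature container: each example is a list of (index, value) pairs."""

    def __init__(self, rows, dim):
        self.rows = rows
        self._dim = dim

    def num_vectors(self):
        return len(self.rows)

    def dim(self):
        return self._dim

    def dense_dot(self, i, w):
        """Dot product between example i and a dense weight vector w."""
        return sum(v * w[j] for j, v in self.rows[i])

    def add_to_dense_vec(self, alpha, i, w):
        """In-place update w += alpha * x_i."""
        for j, v in self.rows[i]:
            w[j] += alpha * v


def train_perceptron(feats, labels, epochs=10):
    """Linear learner that touches the data only through the two operations above."""
    w = [0.0] * feats.dim()
    for _ in range(epochs):
        for i in range(feats.num_vectors()):
            # Misclassified (or on the boundary): move w towards the example.
            if labels[i] * feats.dense_dot(i, w) <= 0:
                feats.add_to_dense_vec(labels[i], i, w)
    return w


feats = SparseFeatures(
    rows=[[(0, 1.0)], [(1, 1.0)], [(0, 2.0), (2, 1.0)], [(1, 3.0)]],
    dim=3,
)
labels = [1, -1, 1, -1]
w = train_perceptron(feats, labels)
```

Swapping `SparseFeatures` for a dense or on-demand computed container would require no change to `train_perceptron`, as long as the same two methods are provided.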

Features

SHOGUN includes the LinAdd accelerations for string kernels and the COFFIN framework for on-demand computation of features for the linear SVMs it contains. In addition, it contains more advanced Multiple Kernel Learning, Multi-Task Learning and Structured Output learning algorithms, as well as other linear methods. SHOGUN accepts input feature objects of nearly any type, e.g., dense, sparse or variable-length features (strings) over any of char/byte/word/int/long int/float/double/long double.
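The general idea behind this kind of acceleration can be sketched in a few lines of Python. This is an illustration under stated assumptions, not SHOGUN's implementation: it uses a simple k-mer counting (spectrum-style) kernel, and all function names are hypothetical. When a string kernel is a dot product in an explicit feature space, the kernel expansion f(x) = sum_i alpha_i k(x_i, x) can be replaced by a single precomputed weight map w = sum_i alpha_i phi(x_i), so that prediction costs one lookup per position in x instead of one kernel evaluation per support string.

```python
from collections import Counter, defaultdict


def kmer_counts(s, k):
    """Feature map phi(s): count every substring of length k in s."""
    return Counter(s[i:i + k] for i in range(len(s) - k + 1))


def spectrum_kernel(a, b, k):
    """k(a, b) = <phi(a), phi(b)> over k-mer counts."""
    ca, cb = kmer_counts(a, k), kmer_counts(b, k)
    return sum(n * cb[kmer] for kmer, n in ca.items())


def slow_output(alphas, support, x, k):
    """f(x) = sum_i alpha_i * k(x_i, x): one kernel evaluation per support string."""
    return sum(a * spectrum_kernel(s, x, k) for a, s in zip(alphas, support))


def build_w(alphas, support, k):
    """Precompute w = sum_i alpha_i * phi(x_i) as a sparse k-mer -> weight map."""
    w = defaultdict(float)
    for a, s in zip(alphas, support):
        for kmer, n in kmer_counts(s, k).items():
            w[kmer] += a * n
    return w


def fast_output(w, x, k):
    """f(x) = <w, phi(x)>: one lookup per k-mer position in x."""
    return sum(w.get(x[i:i + k], 0.0) for i in range(len(x) - k + 1))


alphas = [0.5, -1.0, 2.0]
support = ["ACGTAC", "TTACGA", "GATTACA"]
w = build_w(alphas, support, k=3)
query = "ACGATTACGT"
```

Both `slow_output(alphas, support, query, 3)` and `fast_output(w, query, 3)` compute the same value; the second no longer depends on the number of support strings at prediction time.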

The toolbox provides efficient implementations of 35 different kernels, among them the

- Linear,

- Polynomial,

- Gaussian and

- Sigmoid Kernel

and also provides a number of recent string kernels like the

- Locality Improved,

- Fisher,

- TOP,

- Spectrum,

- Weighted Degree Kernel (with shifts).

For the latter, the efficient LinAdd optimizations are implemented. SHOGUN also offers the freedom of working with custom pre-computed kernels. One of its key features is the combined kernel, which is constructed as a weighted linear combination of a number of sub-kernels, each of which need not work on the same domain. An optimal sub-kernel weighting can be learned using Multiple Kernel Learning. Currently, SVM one-class, 2-class and multi-class classification as well as regression problems are supported. SHOGUN also implements a number of linear methods such as

- Linear Discriminant Analysis (LDA),

- Linear Programming Machine (LPM) and

- Perceptrons

and features algorithms to train Hidden Markov Models.
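The combined kernel described above can be sketched as follows. This is a plain-Python illustration with hypothetical function names, not SHOGUN's API: each example carries two views (a numeric vector and a string), each view has its own sub-kernel, and the combined kernel is their weighted sum. The weights are fixed by hand here; learning them is what Multiple Kernel Learning would do and is not shown.

```python
import math


def gaussian_kernel(x, y, width=1.0):
    """Sub-kernel on numeric vectors: exp(-||x - y||^2 / width)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / width)


def spectrum_kernel(s, t, k=2):
    """Sub-kernel on strings: dot product of k-mer count vectors."""
    def counts(u):
        c = {}
        for i in range(len(u) - k + 1):
            c[u[i:i + k]] = c.get(u[i:i + k], 0) + 1
        return c

    cs, ct = counts(s), counts(t)
    return sum(n * ct.get(kmer, 0) for kmer, n in cs.items())


def combined_kernel(subkernels, weights):
    """Return k(a, b) = sum_j weights[j] * subkernels[j](a[j], b[j])."""
    def evaluate(a, b):
        return sum(
            w * sub(a[j], b[j])
            for j, (w, sub) in enumerate(zip(weights, subkernels))
        )
    return evaluate


# Each example has two views living in different domains.
examples = [
    ([0.0, 1.0], "ACGT"),
    ([0.5, 1.0], "ACGA"),
    ([3.0, -1.0], "TTTT"),
]
kernel = combined_kernel([gaussian_kernel, spectrum_kernel], weights=[0.7, 0.3])
gram = [[kernel(a, b) for b in examples] for a in examples]
```

With non-negative weights, a weighted sum of valid kernels is again a valid kernel, so `gram` can be passed to any method that accepts a pre-computed kernel matrix.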

The input feature objects can be read from plain ASCII files (tab-separated values for dense matrices; libsvm/svmlight format for sparse matrices), from an efficient native binary format, and, with general support, from the HDF5-based format, supporting

- dense

- sparse or

- strings of various types

which can often be converted into one another. Chains of preprocessors (e.g., subtracting the mean) can be attached to each feature object, allowing for on-the-fly pre-processing.
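A chain of preprocessors attached to a feature object might look like the sketch below. All class and method names (`Features`, `add_preprocessor`, `get_vector`, `SubtractMean`, `NormalizeLength`) are assumptions for illustration and are not taken from SHOGUN. The raw data is never modified; the chain is applied each time a vector is requested.

```python
class SubtractMean:
    """Centers each dimension using the mean estimated when the preprocessor is attached."""

    def __init__(self):
        self.mean = None

    def fit(self, vectors):
        n = len(vectors)
        self.mean = [sum(col) / n for col in zip(*vectors)]

    def apply(self, vec):
        return [v - m for v, m in zip(vec, self.mean)]


class NormalizeLength:
    """Scales each vector to unit Euclidean norm (zero vectors are left unchanged)."""

    def fit(self, vectors):
        pass  # Stateless: nothing to estimate.

    def apply(self, vec):
        norm = sum(v * v for v in vec) ** 0.5
        return [v / norm for v in vec] if norm > 0 else list(vec)


class Features:
    """Hypothetical dense feature object with an attached preprocessor chain."""

    def __init__(self, vectors):
        self._raw = vectors
        self._chain = []

    def add_preprocessor(self, preproc):
        # Fit on the output of the chain built so far, then append.
        preproc.fit([self.get_vector(i) for i in range(len(self._raw))])
        self._chain.append(preproc)

    def get_vector(self, i):
        # Apply the chain on the fly; the stored data stays untouched.
        vec = self._raw[i]
        for preproc in self._chain:
            vec = preproc.apply(vec)
        return vec


feats = Features([[1.0, 2.0], [3.0, 6.0]])
feats.add_preprocessor(SubtractMean())
centered = feats.get_vector(0)
feats.add_preprocessor(NormalizeLength())
normalized = feats.get_vector(0)
```

Applying the chain lazily trades some repeated computation for memory, since no preprocessed copy of the data set has to be stored.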

Structure and Interfaces

SHOGUN's core is implemented in C++ and is provided as a library, libshogun, that is readily usable by C++ application developers. Its common interface functions are encapsulated in libshogunui, so that only minimal code (such as setting or getting a double matrix to/from the target language) is necessary per interface. This allowed us to easily create interfaces to Matlab(tm), R, Octave and Python. Note that both modular object-oriented and static interfaces are provided: r, octave, matlab, python, python_modular, r_modular, octave_modular, cmdline, libshogun.

Application

We have successfully applied SHOGUN to several problems from computational biology, such as Super Family classification, Splice Site Prediction, Interpreting the SVM Classifier, Splice Form Prediction, Alternative Splicing and Promoter Prediction. Some of them come with no less than 10 million training examples, others with 7 billion test examples.

Documentation

We use Doxygen for both user and developer documentation, which may be read online. The documentation contains more than 600 documented examples for the interfaces python_modular, octave_modular, r_modular, static python, static matlab and octave, static r, static command line and the C++ libshogun developer interface.

- Changes to previous version:

This release features the work of our 8 GSoC 2014 students [student; mentors]:

- OpenCV Integration and Computer Vision Applications [Abhijeet Kislay; Kevin Hughes]

- Large-Scale Multi-Label Classification [Abinash Panda; Thoralf Klein]

- Large-scale structured prediction with approximate inference [Jiaolong Xu; Shell Hu]

- Essential Deep Learning Modules [Khaled Nasr; Sergey Lisitsyn, Theofanis Karaletsos]

- Fundamental Machine Learning: decision trees, kernel density estimation [Parijat Mazumdar; Fernando Iglesias]

- Shogun Missionary & Shogun in Education [Saurabh Mahindre; Heiko Strathmann]

- Testing and Measuring Variable Interactions With Kernels [Soumyajit De; Dino Sejdinovic, Heiko Strathmann]

- Variational Learning for Gaussian Processes [Wu Lin; Heiko Strathmann, Emtiyaz Khan]

It also contains several cleanups and bugfixes:

Features

- New Shogun project description [Heiko Strathmann]

- ID3 algorithm for decision tree learning [Parijat Mazumdar]

- New modes for PCA matrix factorizations: SVD & EVD, in-place or reallocating [Parijat Mazumdar]

- Add Neural Networks with linear, logistic and softmax neurons [Khaled Nasr]

- Add kernel multiclass strategy examples in multiclass notebook [Saurabh Mahindre]

- Add decision trees notebook containing examples for ID3 algorithm [Parijat Mazumdar]

- Add sudoku recognizer ipython notebook [Alejandro Hernandez]

- Add in-place subsets on features, labels, and custom kernels [Heiko Strathmann]

- Add Principal Component Analysis notebook [Abhijeet Kislay]

- Add Multiple Kernel Learning notebook [Saurabh Mahindre]

- Add Multi-Label classes to enable Multi-Label classification [Thoralf Klein]

- Add rectified linear neurons, dropout and max-norm regularization to neural networks [Khaled Nasr]

- Add C4.5 algorithm for multiclass classification using decision trees [Parijat Mazumdar]

- Add support for arbitrary acyclic graph-structured neural networks [Khaled Nasr]

- Add CART algorithm for classification and regression using decision trees [Parijat Mazumdar]

- Add CHAID algorithm for multiclass classification and regression using decision trees [Parijat Mazumdar]

- Add Convolutional Neural Networks [Khaled Nasr]

- Add Random Forests algorithm for ensemble learning using CART [Parijat Mazumdar]

- Add Restricted Boltzmann Machines [Khaled Nasr]

- Add Stochastic Gradient Boosting algorithm for ensemble learning [Parijat Mazumdar]

- Add Deep contractive and denoising autoencoders [Khaled Nasr]

- Add Deep belief networks [Khaled Nasr]

Bugfixes

- Fix reference counting bugs in CList when reference counting is on [Heiko Strathmann, Thoralf Klein, lambday]

- Fix memory problem in PCA::apply_to_feature_matrix [Parijat Mazumdar]

- Fix crash in LeastAngleRegression for the case D greater than N [Parijat Mazumdar]

- Fix memory violations in bundle method solvers [Thoralf Klein]

- Fix failure in the library_mldatahdf5.cpp example when http://mldata.org is not working properly [Parijat Mazumdar]

- Fix memory leaks in Vowpal Wabbit, LibSVMFile and KernelPCA [Thoralf Klein]

- Fix memory and control flow issues discovered by Coverity [Thoralf Klein]

- Fix R modular interface SWIG typemap (Requires SWIG >= 2.0.5) [Matt Huska]

Cleanup and API Changes

- PCA now depends on Eigen3 instead of LAPACK [Parijat Mazumdar]

- Remove redundant imports and fix implicit ones [Thoralf Klein]

- Hide many methods from SWIG, reducing compile memory by 500MiB [Heiko Strathmann, Fernando Iglesias, Thoralf Klein]

- BibTeX Entry: Download

- Corresponding Paper BibTeX Entry: Download

- Supported Operating Systems: Cygwin, Linux, Macosx, Bsd

- Data Formats: Plain Ascii, Svmlight, Binary, Fasta, Fastq, Hdf

- Tags: Bioinformatics, Large Scale, String Kernel, Kernel, Kernelmachine, Lda, Lpm, Matlab, Mkl, Octave, Python, R, Svm, Sgd, Icml2010, Liblinear, Libsvm, Multiple Kernel Learning, Ocas, Gaussian Processes, Reg

- Archive: download here

Comments

-

- Soeren Sonnenburg (on September 12, 2008, 16:14:36)

- In case you find bugs, feel free to report them at [http://trac.tuebingen.mpg.de/shogun](http://trac.tuebingen.mpg.de/shogun).

-

- Tom Fawcett (on January 3, 2011, 03:20:48)

- You say, "Some of them come with no less than 10 million training examples, others with 7 billion test examples." I'm not sure what this means. I have problems with mixed symbolic/numeric attributes and the training example sets don't fit in memory. Does SHOGUN require that training examples fit in memory?

-

- Soeren Sonnenburg (on January 14, 2011, 18:12:01)

- Shogun does not necessarily require examples to be in memory (if you use any of the FileFeatures). However, most algorithms within shogun are batch type - so using the non in-memory FileFeatures would probably be very slow. This does not matter for doing predictions of course, even though the 7 billion test examples above referred to predicting gene starts on the whole human genome (in memory ~3.5GB and a context window of 1200nt was shifted around in that string). In addition one can compute features (or feature space) on-the-fly potentially saving lots of memory. Not sure how big your problem is but I guess this is better discussed on the shogun mailinglist.

-

- Yuri Hoffmann (on September 14, 2013, 17:12:16)

- cannot use the java interface in cygwin (already reported on github) nor in debian.


Project details for SHOGUN