- Description:

The Weka workbench contains a collection of visualization tools and algorithms for data analysis and predictive modelling, together with graphical user interfaces for easy access to this functionality. The main strengths of Weka are that it is freely available under the GNU General Public License, very portable because it is fully implemented in the Java programming language and thus runs on almost any computing platform, contains a comprehensive collection of data preprocessing and modeling techniques, and is easy to use by a novice due to the graphical user interfaces it contains.

Weka supports several standard data mining tasks, more specifically, data preprocessing, clustering, classification, regression, visualization, and feature selection. All of Weka's techniques are predicated on the assumption that the data is available as a single flat file or relation, where each data point is described by a fixed number of attributes (normally, numeric or nominal attributes, but some other attribute types are also supported). Weka provides access to SQL databases using Java Database Connectivity and can process the result returned by a database query. It is not capable of multi-relational data mining, but there is separate software for converting a collection of linked database tables into a single table that is suitable for processing using Weka. Another important area that is currently not covered by the algorithms included in the Weka distribution is sequence modeling.
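The idea of reducing linked database tables to one flat relation can be illustrated with a small sketch. It uses Python's built-in `sqlite3` module in place of Java Database Connectivity, and the `customers` and `orders` tables, their columns, and the function names are hypothetical. It is not how Weka or the separate conversion software works internally; it only shows a query result in which every data point has the same fixed set of attributes.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with two linked tables (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
        INSERT INTO customers VALUES (1, 'north'), (2, 'south'), (3, 'north');
        INSERT INTO orders VALUES (10, 1, 20.0), (11, 1, 5.5), (12, 2, 7.0);
        """
    )
    return conn


def flatten_orders(conn):
    """Return one row per customer: a nominal attribute plus two numeric summaries."""
    query = """
        SELECT c.id,
               c.region,
               COUNT(o.id) AS n_orders,
               COALESCE(SUM(o.amount), 0.0) AS total_amount
        FROM customers AS c
        LEFT JOIN orders AS o ON o.customer_id = c.id
        GROUP BY c.id, c.region
        ORDER BY c.id
    """
    return conn.execute(query).fetchall()


rows = flatten_orders(build_demo_db())
for row in rows:
    print(row)
```

Each returned tuple has the same four fields, so the result could be written out as a single flat file. Aggregating the one-to-many `orders` table into counts and sums is only one of several possible ways to flatten such data.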

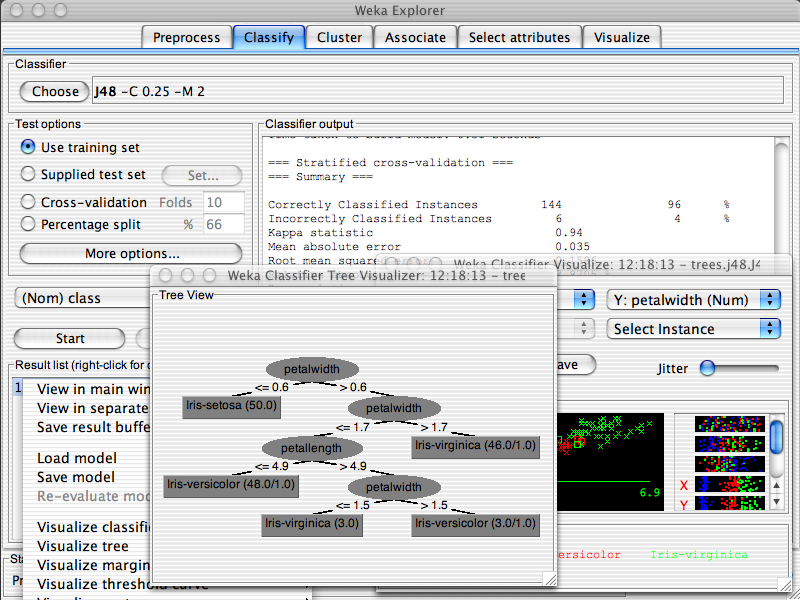

Weka's main user interface is the Explorer, but essentially the same functionality can be accessed through the component-based Knowledge Flow interface and from the command line. There is also the Experimenter, which allows the systematic comparison of the predictive performance of Weka's machine learning algorithms on a collection of datasets.

The Explorer interface has several panels that give access to the main components of the workbench. The Preprocess panel has facilities for importing data from a database, a CSV file, etc., and for preprocessing this data using a so-called filtering algorithm. These filters can be used to transform the data (e.g., turning numeric attributes into discrete ones) and make it possible to delete instances and attributes according to specific criteria. The Classify panel enables the user to apply classification and regression algorithms (indiscriminately called classifiers in Weka) to the resulting dataset, to estimate the accuracy of the resulting predictive model, and to visualize erroneous predictions, ROC curves, etc., or the model itself (if the model is amenable to visualization, as a decision tree is). Weka contains many of the latest sophisticated methods, such as support vector machines, Gaussian processes, and random forests, as well as classic methods like C4.5, ANNs, bagging, and boosting. The Associate panel provides access to association rule learners that attempt to identify all important interrelationships between attributes in the data. The Cluster panel gives access to the clustering techniques in Weka, e.g., the simple k-means algorithm. There is also an implementation of the expectation maximization algorithm for learning a mixture of normal distributions. The next panel, Select attributes, provides algorithms for identifying the most predictive attributes in a dataset. The last panel, Visualize, shows a scatter plot matrix, where individual scatter plots can be selected, enlarged, and analyzed further using various selection operators.
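As an illustration of what a filter that turns numeric attributes into discrete ones might do, here is a minimal sketch of equal-width binning in plain Python. The function name and parameters are hypothetical, and equal-width binning is just one common discretization scheme chosen for the example; this is not a description of any particular Weka filter.

```python
def equal_width_bins(values, n_bins):
    """Map each numeric value to a bin index in the range 0..n_bins-1.

    The range [min, max] of the values is split into n_bins intervals
    of equal width; the maximum value is placed in the last bin.
    """
    if n_bins < 1:
        raise ValueError("n_bins must be at least 1")
    if not values:
        return []
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical, so a single bin is enough.
        return [0] * len(values)
    width = (hi - lo) / n_bins
    labels = []
    for v in values:
        idx = int((v - lo) / width)
        # Clamp so that the maximum value does not fall into bin n_bins.
        labels.append(min(idx, n_bins - 1))
    return labels


ages = [1.0, 2.0, 5.0, 9.0, 10.0]
print(equal_width_bins(ages, 3))
```

The resulting bin indices can then be treated as the values of a nominal attribute by learners that do not handle numeric inputs directly.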

What's new since version 3.6.0?

- Package management system

- Support for importing PMML support vector machines

- Denormalize filter for flattening transactional data

- SGD stochastic gradient descent for learning binary SVMs, logistic regression and linear regression (can be trained incrementally)

- scatterPlot3D - a new package that adds a 3D scatter plot visualization to the Explorer

- associationRulesVisualizer - a new package that adds a 3D visualization of association rules to the Associate panel of the Explorer

- massiveOnlineAnalysis - a new connector package for the MOA data stream learning tool

- Tabu search method for feature selection

- EM-based imputation of missing values

- Speed improvements for random trees, which in turn lead to faster random forests (up to an order of magnitude faster)

- Support for importing PMML decision trees

- Apriori can make use of sparse instances for market basket type data

- Support for using environment variables in the Knowledge Flow

- Support for Groovy scripting

- Improvements to the loading of CSV files

- Enhanced plugin support in the Explorer's Classify panel

- Filter for sorting the labels of nominal attributes

- Information on instance weights displayed in the Preprocess panel of the Explorer

- Wrapper feature selection now supports mae, f-measure, auc, rmse (probabilities), mae (probabilities) as well as the original error rate and rmse

- Converters to read and write Matlab's ASCII file format

- FPGrowth association rule learner

- SPegasos algorithm for learning linear support vector machines via stochastic gradient descent

- PMML decision trees and rule set models

- Cost/benefit analysis component

- Support for parallel processing in some meta learners

- FURIA rule learner

- Friedman's RealAdaBoost algorithm

- HierarchicalClusterer, implementing a number of hierarchical clustering techniques

- Improved and faster version of Gaussian process regression

- Evaluation routines for conditional density estimates and interval estimators

- OneClassClassifier

- Architectural changes: Classifier and Instance are now interfaces; core does not depend on FastVector any more

- SGDText - stochastic gradient descent for learning linear SVMs and logistic regression for text problems. Operates incrementally and directly on string attributes.

- MITI multi-instance tree learner and MIRI rule learner variant.

- sasLoader - SAS sas7bdat file reader.

- wekaServer - A simple servlet-based server for executing data mining tasks.

- Incremental version of the multi-class meta classifier.

- NaiveBayesMultinomialText - version of multinomial NB that operates directly on string attributes.

- Integration with the R statistical environment

- Local Outlier Factor (LOF) anomaly detection filter

- Isolation Forests outlier detection

- Multilayer perceptrons package

- HoeffdingTree. Ported from the MOA implementation to a Weka classifier

- MergeInfrequentNominalValues filter

- MergeNominalValuesFilter. Uses a CHAID-style merging routine

- Zoom facility in the Knowledge Flow

- Epsilon-insensitive and Huber loss functions in SGD

- More CSVLoader improvements

- Class specific IR metric based evaluation in WrapperSubsetEval

- GainRatioAttributeEval now supports instance weights

- New command line option to force batch training mode when the classifier is an incremental one

- LinearRegression is now faster and more memory efficient thanks to a contribution from Sean Daugherty

- CfsSubsetEval can now use multiple CPUs/cores to pre-compute the correlation matrix (speeds up backward searches)

- GreedyStepwise can now evaluate multiple subsets in parallel

- New kernelLogisticRegression package

- New supervisedAttributeScaling package

- New clojureClassifier package

- localOutlierFactor now includes a wrapper classifier that uses the LOF filter

- scatterPlot3D now includes new Java3D libraries for all major platforms

- New IWSS (Incremental Wrapper Subset Selection) package contributed by Pablo Bermejo

- New MODLEM package (rough set theory based rule induction) contributed by Szymon Wojciechowski
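Several entries in the list above refer to stochastic gradient descent for learning linear SVMs that can be trained incrementally. The following is a minimal sketch of that general technique in plain Python: one update per training example on an L2-regularised hinge loss. The function names, the learning rate, and the regularisation constant are assumptions made for illustration and are not taken from Weka's implementation.

```python
def sgd_update(weights, bias, x, y, learning_rate=0.1, reg=0.01):
    """Apply one SGD step for a linear SVM (hinge loss, L2 penalty).

    x is a list of numeric feature values and y is the label, -1 or +1.
    Returns the updated (weights, bias) pair without modifying the inputs.
    """
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    # Gradient of the L2 penalty: shrink every weight slightly.
    new_weights = [w * (1.0 - learning_rate * reg) for w in weights]
    if y * score < 1.0:
        # The example is inside the margin, so the hinge loss is active.
        new_weights = [w + learning_rate * y * xi for w, xi in zip(new_weights, x)]
        bias = bias + learning_rate * y
    return new_weights, bias


def predict(weights, bias, x):
    """Return +1 or -1 depending on the side of the separating hyperplane."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score >= 0 else -1


# A tiny, linearly separable stream of (features, label) pairs.
stream = [([2.0, 1.0], 1), ([1.5, 2.0], 1), ([-1.0, -1.5], -1), ([-2.0, -0.5], -1)]
weights, bias = [0.0, 0.0], 0.0
for _ in range(20):
    for x, y in stream:
        weights, bias = sgd_update(weights, bias, x, y)

print([predict(weights, bias, x) for x, _ in stream])
```

Because each call to `sgd_update` needs only the current model and a single example, the model can keep learning as new data arrives, which is the sense in which such a learner is incremental.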

- Changes to previous version:

In core weka:

- GUIChooser now has a plugin extension point that allows implementations of GUIChooser.GUIChooserMenuPlugin to appear as entries in either the Tools or Visualization menus

- SubsetByExpression filter now has support for regexp matching

- weka.classifiers.IterativeClassifierOptimizer - a classifier that can efficiently optimize the number of iterations for a base classifier that implements IterativeClassifier

- Speedup for LogitBoost in the two class case

- weka.filters.supervised.instance.ClassBalancer - a simple filter to balance the weight of classes

- New class hierarchy for stopwords algorithms. Includes new methods to read custom stopwords from a file and apply multiple stopwords algorithms

- Ability to turn off capabilities checking in Weka algorithms. Improves runtime for ensemble methods that create a lot of simple base classifiers

- Memory savings in weka.core.Attribute

- Improvements in runtime for SimpleKMeans and EM

- weka.estimators.UnivariateMixtureEstimator - new mixture estimator
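One way to read "balance the weight of classes" in the ClassBalancer entry above is to rescale instance weights so that every class ends up with the same total weight while the overall sum of weights is unchanged. The sketch below shows that reading in plain Python. The function name and the exact rescaling rule are assumptions made for illustration and were not checked against the Weka source.

```python
from collections import defaultdict


def balance_class_weights(labels, weights=None):
    """Rescale instance weights so that each class has equal total weight.

    labels is a list of class labels, one per instance. weights is an
    optional list of positive instance weights (default: all 1.0).
    The sum of all weights is preserved.
    """
    if not labels:
        return []
    if weights is None:
        weights = [1.0] * len(labels)
    class_totals = defaultdict(float)
    for label, w in zip(labels, weights):
        class_totals[label] += w
    # Every class should receive an equal share of the overall weight.
    target = sum(weights) / len(class_totals)
    return [w * target / class_totals[label] for label, w in zip(labels, weights)]


labels = ["a", "a", "a", "b"]
balanced = balance_class_weights(labels)
print(balanced)
```

In this example the single instance of class `b` receives as much weight as the three instances of class `a` combined, so a weight-aware learner would treat both classes as equally important.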

In packages:

- New discriminantAnalysis package. Provides an implementation of Fisher's linear discriminant analysis

- Quartile estimators, correlation matrix heat map and k-means++ clustering in distributed Weka

- Support for default settings for GridSearch via a properties file

- Improvements in scripting with the addition of the official Groovy console (kfGroovy package) from the Groovy project and TigerJython (new tigerjython package) as the Jython console via the GUIChooser

- Support for the latest version of MLR in the RPlugin package

- EAR4 package contributed by Vahid Jalali

- StudentFilters package contributed by Chris Gearhart

- graphgram package contributed by Johannes Schneider

- Supported Operating Systems: Cygwin, Linux, Macosx, Windows

- Data Formats: None

- Tags: Association Rules, Attribute Selection, Classification, Clustering, Preprocessing, Regression
