- Description:

Overview

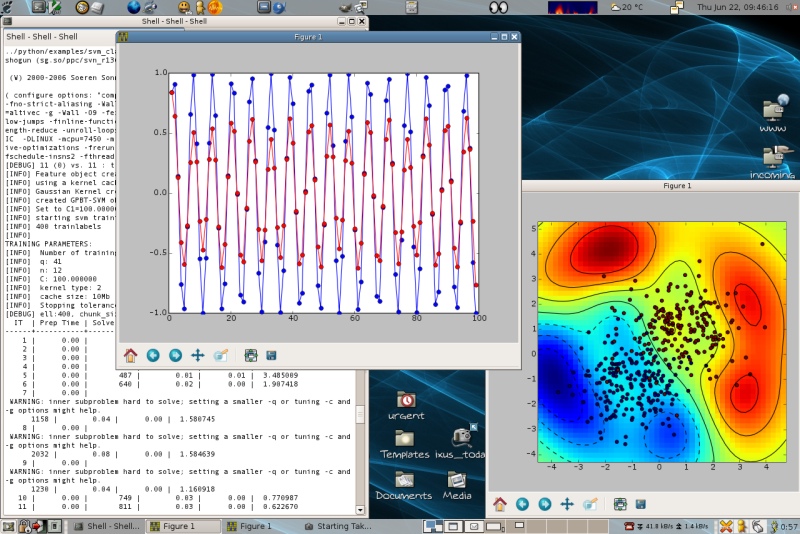

The SHOGUN machine learning toolbox focuses on large-scale kernel methods, especially Support Vector Machines (SVMs). It comes with a generic interface for kernel machines and features 15 different SVM implementations that all access features in a unified way, either via a general kernel framework or, in the case of linear SVMs, via so-called "DotFeatures", i.e., features providing a minimalistic set of operations (like the dot product).
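To illustrate the "DotFeatures" idea, here is a minimal sketch in plain Python. It is not SHOGUN's actual API: the class names, method names (`dense_dot`, `add_to_dense`) and the perceptron trainer are assumptions made for illustration. The point is that a linear learner only needs a dot product and a scaled add, so dense and sparse storage can sit behind the same small interface.

```python
from typing import Dict, List, Protocol, Sequence


class DotFeatures(Protocol):
    """Hypothetical minimal interface: everything a linear learner needs."""

    def num_vectors(self) -> int: ...
    def dense_dot(self, i: int, w: Sequence[float]) -> float: ...
    def add_to_dense(self, alpha: float, i: int, w: List[float]) -> None: ...


class DenseFeatures:
    """Examples stored as full lists of floats."""

    def __init__(self, rows: List[List[float]]) -> None:
        self.rows = rows

    def num_vectors(self) -> int:
        return len(self.rows)

    def dense_dot(self, i: int, w: Sequence[float]) -> float:
        return sum(x * wj for x, wj in zip(self.rows[i], w))

    def add_to_dense(self, alpha: float, i: int, w: List[float]) -> None:
        for j, x in enumerate(self.rows[i]):
            w[j] += alpha * x


class SparseFeatures:
    """Examples stored as {index: value} dicts; zeros are implicit."""

    def __init__(self, rows: List[Dict[int, float]]) -> None:
        self.rows = rows

    def num_vectors(self) -> int:
        return len(self.rows)

    def dense_dot(self, i: int, w: Sequence[float]) -> float:
        return sum(x * w[j] for j, x in self.rows[i].items())

    def add_to_dense(self, alpha: float, i: int, w: List[float]) -> None:
        for j, x in self.rows[i].items():
            w[j] += alpha * x


def train_perceptron(feats: DotFeatures, labels: Sequence[int],
                     dim: int, epochs: int = 10) -> List[float]:
    """Plain perceptron that touches the data only through the interface."""
    w = [0.0] * dim
    for _ in range(epochs):
        for i in range(feats.num_vectors()):
            # Misclassified (or on the boundary): move w towards the example.
            if labels[i] * feats.dense_dot(i, w) <= 0:
                feats.add_to_dense(labels[i], i, w)
    return w
```

Because the trainer never inspects the storage format, the same function runs unchanged on `DenseFeatures` and `SparseFeatures`.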

Features

SHOGUN includes the LinAdd accelerations for string kernels and the COFFIN framework for on-demand computing of features for the contained linear SVMs. In addition it contains more advanced Multiple Kernel Learning, Multi Task Learning and Structured Output learning algorithms and other linear methods. SHOGUN digests input feature-objects of basically any known type, e.g., dense, sparse or variable length features (strings) of any type char/byte/word/int/long int/float/double/long double.

The toolbox provides efficient implementations of 35 different kernels, among them the

- Linear,

- Polynomial,

- Gaussian and

- Sigmoid Kernel

and also provides a number of recent string kernels like the

- Locality Improved,

- Fisher,

- TOP,

- Spectrum,

- Weighted Degree Kernel (with shifts).

For the latter, the efficient LINADD optimizations are implemented. SHOGUN also offers the freedom of working with custom pre-computed kernels. One of its key features is the combined kernel, which can be constructed as a weighted linear combination of a number of sub-kernels that need not all work on the same domain. An optimal sub-kernel weighting can be learned using Multiple Kernel Learning. Currently SVM one-class, 2-class, multi-class classification and regression problems are supported. However, SHOGUN also implements a number of linear methods like

- Linear Discriminant Analysis (LDA)

- Linear Programming Machine (LPM),

- Perceptrons

It also features algorithms to train Hidden Markov Models.
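The combined kernel described above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not SHOGUN code: the function names are hypothetical, the Gaussian kernel uses the parameterization `exp(-||x - y||^2 / width)` as an assumption, and the spectrum kernel is the basic k-mer count version. Each example is a tuple with one entry per sub-kernel, so each sub-kernel sees its own domain (here a real vector and a string).

```python
import math
from collections import Counter
from typing import Any, Callable, Sequence


def gaussian_kernel(x: Sequence[float], y: Sequence[float],
                    width: float = 1.0) -> float:
    """Gaussian kernel on real vectors (parameterization is an assumption)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / width)


def spectrum_kernel(s: str, t: str, k: int = 2) -> float:
    """Spectrum kernel on strings: dot product of k-mer count vectors."""
    counts_s = Counter(s[i:i + k] for i in range(len(s) - k + 1))
    counts_t = Counter(t[i:i + k] for i in range(len(t) - k + 1))
    return float(sum(n * counts_t[kmer] for kmer, n in counts_s.items()))


def combined_kernel(weights: Sequence[float],
                    kernels: Sequence[Callable[[Any, Any], float]],
                    a: Sequence[Any], b: Sequence[Any]) -> float:
    """Weighted linear combination; sub-kernel m sees only a[m] and b[m]."""
    return sum(beta * kern(xa, xb)
               for beta, kern, xa, xb in zip(weights, kernels, a, b))


# Each example carries a real-valued part and a sequence part.
example_a = ([0.0, 1.0], "ACGT")
example_b = ([0.0, 1.0], "ACGA")
value = combined_kernel([0.5, 0.5], [gaussian_kernel, spectrum_kernel],
                        example_a, example_b)
```

In this sketch the weights are fixed by hand; Multiple Kernel Learning would instead learn them from the data.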

The input feature objects can be read from plain ASCII files (tab-separated values for dense matrices; libsvm/svmlight format for sparse matrices), from an efficient native binary format, and, with general support, from the HDF5-based format, supporting

- dense

- sparse or

- strings of various types

that can often be converted between each other. Chains of preprocessors (e.g. subtracting the mean) can be attached to each feature object allowing for on-the-fly pre-processing.
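The idea of attaching a chain of preprocessors to a feature object can be sketched as follows. This is a hypothetical illustration in plain Python, not SHOGUN's interface: the `Features` class, `add_preprocessor`, and `get_vector` are names assumed for the example. The stored data is never modified; the chain is applied each time a vector is requested.

```python
import math
from typing import Callable, List

Vector = List[float]
Preprocessor = Callable[[Vector], Vector]


class Features:
    """Hypothetical dense feature object with an attached preprocessor chain."""

    def __init__(self, rows: List[Vector]) -> None:
        self.rows = rows
        self.preprocessors: List[Preprocessor] = []

    def add_preprocessor(self, preproc: Preprocessor) -> None:
        self.preprocessors.append(preproc)

    def get_vector(self, i: int) -> Vector:
        # Apply the chain on the fly, in the order it was attached.
        vec = list(self.rows[i])
        for preproc in self.preprocessors:
            vec = preproc(vec)
        return vec


def make_mean_subtractor(rows: List[Vector]) -> Preprocessor:
    """Estimate the per-dimension mean once, then subtract it per vector."""
    n = len(rows)
    mean = [sum(column) / n for column in zip(*rows)]
    return lambda vec: [x - m for x, m in zip(vec, mean)]


def normalize_length(vec: Vector) -> Vector:
    """Scale a vector to unit Euclidean norm (zero vectors are left as-is)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else vec


data = [[1.0, 2.0], [3.0, 6.0]]
feats = Features(data)
feats.add_preprocessor(make_mean_subtractor(data))
feats.add_preprocessor(normalize_length)
first = feats.get_vector(0)  # mean-subtracted, then length-normalized
```

Applying the chain lazily trades a little computation per access for not having to keep a second, preprocessed copy of the data.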

Structure and Interfaces

SHOGUN's core is implemented in C++ and is provided as a library, libshogun, to be readily usable by C++ application developers. Its common interface functions are encapsulated in libshogunui, such that only minimal code (like setting or getting a double matrix to/from the target language) is necessary. This allowed us to easily create interfaces to Matlab(tm), R, Octave and Python. (Note that both modular object-oriented and static interfaces are provided: r, octave, matlab, python, python_modular, r_modular, octave_modular, cmdline, libshogun.)

Application

We have successfully applied SHOGUN to several problems from computational biology, such as Super Family classification, Splice Site Prediction, Interpreting the SVM Classifier, Splice Form Prediction, Alternative Splicing and Promoter Prediction. Some of them come with no less than 10 million training examples, others with 7 billion test examples.

Documentation

We use Doxygen for both user and developer documentation, which may be read online. More than 600 documented examples for the interfaces python_modular, octave_modular, r_modular, static python, static matlab and octave, static r, static command line and the C++ libshogun developer interface can be found in the documentation.

- Changes to previous version:

This release features 8 successful Google Summer of Code projects and it is the result of an incredible effort by our students. All projects come with very cool ipython-notebooks that contain background, code examples and visualizations. These can be found on our webpage!

Features

- In addition, the following features have been added:

- Added method to importance sample the (true) marginal likelihood of a Gaussian Process using a posterior approximation.

- Added a new class for classical probability distribution that can be sampled and whose log-pdf can be evaluated. Added the multivariate Gaussian with various numerical flavours.

- The cross-validation framework now works with Gaussian Processes.

- Added nu-SVR for LibSVR class

- Model selection is now supported for parameters of sub-kernels of combined kernels in the MKL context. Thanks to Evangelos Anagnostopoulos.

- Probability output for multi-class SVMs is now supported using various heuristics. Thanks to Shell Xu Hu.

- Added an "equals" method to all Shogun objects that recursively compares all registered parameters with those of another instance -- up to a specified accuracy.

- Added a "clone" method to all Shogun objects that creates a deep copy

- Multiclass LDA. Thanks to Kevin Hughes.

- Added a new datatype, complex128_t, for complex numbers. Math functions, support for SGVector/Matrix, SGSparseVector/Matrix, and serialization with Ascii and Xml files added. [Soumyajit De].

- Added mini-framework for numerical integration in one variable. Implemented Gauss-Kronrod and Gauss-Hermite quadrature formulas.

- Changed from configure script to CMake by Viktor Gal.

- Add C++0x and C++11 cmake detection scripts

- ND-Array typemap support for python and octave modular.
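As a small illustration of the Gauss-Hermite quadrature mentioned in the list above, the following sketch hard-codes the standard 3-point rule for integrals of the form ∫ exp(-t²) f(t) dt and uses it to approximate an expectation under a one-dimensional Gaussian. It is a self-contained Python example, not SHOGUN's implementation; the function names are assumptions made for illustration.

```python
import math
from typing import Callable

# Nodes and weights of the 3-point Gauss-Hermite rule
# (weight function exp(-t^2); exact for polynomials up to degree 5).
NODES = (-math.sqrt(1.5), 0.0, math.sqrt(1.5))
WEIGHTS = (math.sqrt(math.pi) / 6.0,
           2.0 * math.sqrt(math.pi) / 3.0,
           math.sqrt(math.pi) / 6.0)


def gauss_hermite(f: Callable[[float], float]) -> float:
    """Approximate the integral of exp(-t^2) * f(t) over the real line."""
    return sum(w * f(t) for t, w in zip(NODES, WEIGHTS))


def gaussian_expectation(f: Callable[[float], float],
                         mean: float, std: float) -> float:
    """Approximate E[f(X)] for X ~ N(mean, std^2).

    Uses the substitution x = mean + sqrt(2) * std * t, which turns the
    Gaussian density into the exp(-t^2) weight up to a factor 1/sqrt(pi).
    """
    integral = gauss_hermite(lambda t: f(mean + math.sqrt(2.0) * std * t))
    return integral / math.sqrt(math.pi)


# Second moment of N(1, 2^2); the exact value is mean^2 + std^2 = 5.
second_moment = gaussian_expectation(lambda x: x * x, mean=1.0, std=2.0)
```

For non-polynomial integrands the 3-point rule is only an approximation; more nodes would be needed for higher accuracy.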

Bugfixes

Fix json serialization.

Fixed bugs in FITC inference method that caused wrong posterior results.

Fixed bugs in GP Regression that caused negative values for the variances.

Fixed two memory errors in the streaming-features framework.

Fixed bug in the Kernel Mean Matching implementation (thanks to Meghana Kshirsagar).

Cleanups and API Changes:

Switch compile system to cmake

SGSparseVector/Matrix are now derived from SGReferenceData and thus refcounted.

Move README and INSTALL files to top level directory.

Use common RefCount class for ReferencedData and CSGObjects.

Rename HMSVMLabels to SequenceLabels

Refactored method to fit a sigmoid to SVM scores, now in CStatistics, still called from CBinaryLabels.

Use Dynamic arrays to hold preprocessors in features instead of raw pointers.

Use Dynamic arrays to hold Features in CombinedFeatures.

Use Dynamic arrays to hold Kernels in CombinedKernels/ProductKernels.

Use Eigen3 for GPs, LDA

- Supported Operating Systems: Cygwin, Linux, Macosx, Bsd

- Data Formats: Plain Ascii, Svmlight, Binary, Fasta, Fastq, Hdf

- Tags: Bioinformatics, Large Scale, String Kernel, Kernel, Kernelmachine, Lda, Lpm, Matlab, Mkl, Octave, Python, R, Svm, Sgd, Icml2010, Liblinear, Libsvm, Multiple Kernel Learning, Ocas, Gaussian Processes, Reg

Comments

- Soeren Sonnenburg (on September 12, 2008, 16:14:36)

- In case you find bugs, feel free to report them at [http://trac.tuebingen.mpg.de/shogun](http://trac.tuebingen.mpg.de/shogun).

- Tom Fawcett (on January 3, 2011, 03:20:48)

- You say, "Some of them come with no less than 10 million training examples, others with 7 billion test examples." I'm not sure what this means. I have problems with mixed symbolic/numeric attributes and the training example sets don't fit in memory. Does SHOGUN require that training examples fit in memory?

- Soeren Sonnenburg (on January 14, 2011, 18:12:01)

- Shogun does not necessarily require examples to be in memory (if you use any of the FileFeatures). However, most algorithms within shogun are batch type - so using the non in-memory FileFeatures would probably be very slow. This does not matter for doing predictions of course, even though the 7 billion test examples above referred to predicting gene starts on the whole human genome (in memory ~3.5GB and a context window of 1200nt was shifted around in that string). In addition one can compute features (or feature space) on-the-fly potentially saving lots of memory. Not sure how big your problem is but I guess this is better discussed on the shogun mailinglist.

- Yuri Hoffmann (on September 14, 2013, 17:12:16)

- cannot use the java interface in cygwin (already reported on github) nor in debian.
