- Description:

Overview

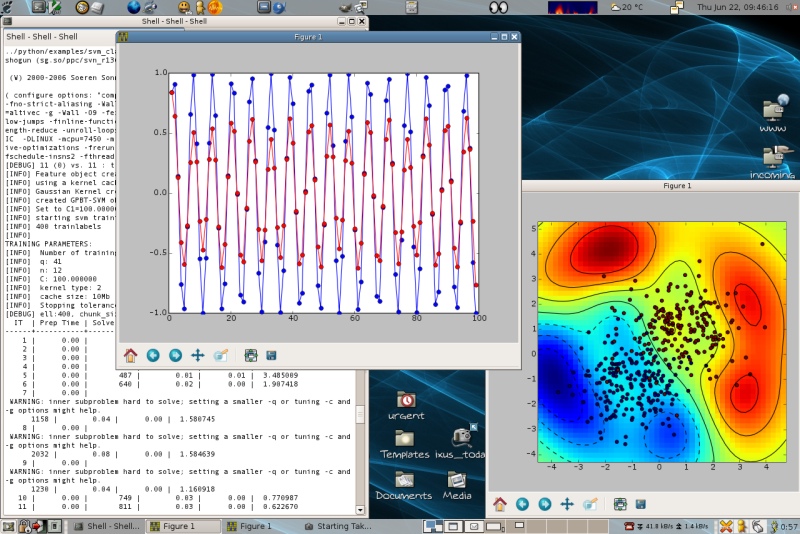

The SHOGUN machine learning toolbox focuses on large-scale kernel methods, especially Support Vector Machines (SVMs). It comes with a generic interface for kernel machines and features 15 different SVM implementations that all access features in a unified way, either via a general kernel framework or, in the case of linear SVMs, via so-called "DotFeatures", i.e., features providing a minimalistic set of operations (like the dot product).
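To illustrate the idea behind "DotFeatures", here is a minimal Python sketch, not SHOGUN's actual API. The class names `DenseDotFeatures` and `SparseDotFeatures`, the method names and the helper `linear_predict` are hypothetical; the point is only that a linear classifier can work with any storage format as long as it offers a dot product with a dense weight vector.

```python
from typing import Dict, List, Sequence


class DenseDotFeatures:
    """Hypothetical dense container: one list of floats per example."""

    def __init__(self, rows: List[List[float]]):
        self.rows = rows

    def num_vectors(self) -> int:
        return len(self.rows)

    def dot(self, index: int, w: Sequence[float]) -> float:
        # Dot product of example `index` with a dense weight vector.
        return sum(x * wi for x, wi in zip(self.rows[index], w))


class SparseDotFeatures:
    """Hypothetical sparse container: each example maps column -> value."""

    def __init__(self, rows: List[Dict[int, float]]):
        self.rows = rows

    def num_vectors(self) -> int:
        return len(self.rows)

    def dot(self, index: int, w: Sequence[float]) -> float:
        # Only the non-zero entries contribute to the dot product.
        return sum(v * w[j] for j, v in self.rows[index].items())


def linear_predict(features, w: Sequence[float], bias: float = 0.0) -> List[int]:
    """Label every example using nothing but the dot() operation."""
    return [
        1 if features.dot(i, w) + bias >= 0 else -1
        for i in range(features.num_vectors())
    ]


# The same two examples stored densely and sparsely.
dense = DenseDotFeatures([[1.0, 0.0, 2.0], [0.0, -3.0, 0.0]])
sparse = SparseDotFeatures([{0: 1.0, 2: 2.0}, {1: -3.0}])
w = [0.5, 1.0, 0.25]

print(linear_predict(dense, w))
print(linear_predict(sparse, w))
```

Because `linear_predict` never looks inside the container, both calls yield the same labels for the same data.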

Features

SHOGUN includes the LinAdd accelerations for string kernels and the COFFIN framework for on-demand computing of features for the contained linear SVMs. In addition it contains more advanced Multiple Kernel Learning, Multi Task Learning and Structured Output learning algorithms and other linear methods. SHOGUN digests input feature-objects of basically any known type, e.g., dense, sparse or variable length features (strings) of any type char/byte/word/int/long int/float/double/long double.

The toolbox provides efficient implementations of 35 different kernels, among them the

- Linear,

- Polynomial,

- Gaussian and

- Sigmoid Kernel

and also provides a number of recent string kernels like the

- Locality Improved,

- Fisher,

- TOP,

- Spectrum,

- Weighted Degree Kernel (with shifts).

For the latter, the efficient LINADD optimizations are implemented. SHOGUN also offers the freedom of working with custom pre-computed kernels. One of its key features is the combined kernel, which can be constructed as a weighted linear combination of a number of sub-kernels, each of which does not necessarily work on the same domain. An optimal sub-kernel weighting can be learned using Multiple Kernel Learning. Currently, SVM one-class, two-class and multi-class classification as well as regression problems are supported. In addition, SHOGUN implements a number of linear methods like

- Linear Discriminant Analysis (LDA),

- Linear Programming Machine (LPM) and

- Perceptrons.

It also features algorithms to train Hidden Markov Models.
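The combined kernel mentioned above, a weighted linear combination of sub-kernels that may live on different domains, can be sketched in plain Python as follows. This is an illustration under stated assumptions, not SHOGUN code: the function names, the Gaussian `width` parameterization, the 2-mer spectrum kernel and the fixed weights are all chosen for the example. In Multiple Kernel Learning the weights would be learned rather than set by hand.

```python
import math
from collections import Counter
from typing import Sequence, Tuple

# Each example carries a numeric part and a string part (two domains).
Example = Tuple[Sequence[float], str]


def linear_kernel(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def gaussian_kernel(a: Sequence[float], b: Sequence[float], width: float = 2.0) -> float:
    # Assumed parameterization: exp(-||a - b||^2 / width).
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-sq_dist / width)


def spectrum_kernel(s: str, t: str, k: int = 2) -> float:
    # Inner product of the k-mer count vectors of both strings.
    counts_s = Counter(s[i:i + k] for i in range(len(s) - k + 1))
    counts_t = Counter(t[i:i + k] for i in range(len(t) - k + 1))
    return float(sum(counts_s[m] * counts_t[m] for m in counts_s))


def combined_kernel(x: Example, y: Example, weights: Sequence[float]) -> float:
    """Weighted sum of sub-kernels, each evaluated on its own domain."""
    (vec_x, str_x), (vec_y, str_y) = x, y
    values = [
        linear_kernel(vec_x, vec_y),    # numeric domain
        gaussian_kernel(vec_x, vec_y),  # numeric domain
        spectrum_kernel(str_x, str_y),  # string domain
    ]
    return sum(w * v for w, v in zip(weights, values))


x = ([1.0, 2.0], "ACGT")
y = ([1.0, 2.0], "ACGA")
print(combined_kernel(x, y, weights=[0.5, 0.25, 0.25]))
```

A non-negative weighted sum of valid kernels is again a valid kernel, which is why the combination can be passed to the same kernel machines as any single kernel.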

The input feature objects can be read from plain ASCII files (tab-separated values for dense matrices; libsvm/svmlight format for sparse matrices), from an efficient native binary format and, through general HDF5 support, from HDF5-based files, supporting

- dense

- sparse or

- strings of various types

that can often be converted between each other. Chains of preprocessors (e.g. subtracting the mean) can be attached to each feature object allowing for on-the-fly pre-processing.
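As a rough sketch of how such a preprocessor chain could behave, the following Python example applies each attached preprocessor only when a feature vector is requested. The class `PreprocessedFeatures` and the helpers `make_mean_subtractor` and `unit_norm` are hypothetical names for illustration and do not reflect SHOGUN's interface.

```python
import math
from typing import Callable, List

Vector = List[float]


class PreprocessedFeatures:
    """Hypothetical feature object that runs a preprocessor chain lazily."""

    def __init__(self, rows: List[Vector]):
        self.rows = rows
        self.chain: List[Callable[[Vector], Vector]] = []

    def add_preprocessor(self, fn: Callable[[Vector], Vector]) -> None:
        self.chain.append(fn)

    def get_vector(self, index: int) -> Vector:
        # The stored data stays untouched; the chain is applied on access.
        vec = list(self.rows[index])
        for fn in self.chain:
            vec = fn(vec)
        return vec


def make_mean_subtractor(rows: List[Vector]) -> Callable[[Vector], Vector]:
    # Column means are estimated once, then subtracted from each vector.
    n = len(rows)
    means = [sum(col) / n for col in zip(*rows)]
    return lambda vec: [x - m for x, m in zip(vec, means)]


def unit_norm(vec: Vector) -> Vector:
    # Scale to Euclidean length 1; leave all-zero vectors unchanged.
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else vec


data = [[0.0, 0.0], [6.0, 8.0]]
features = PreprocessedFeatures(data)
features.add_preprocessor(make_mean_subtractor(data))
features.add_preprocessor(unit_norm)

print(features.get_vector(0))
```

The order of the chain matters: here the mean is removed first and the result is normalized afterwards.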

Structure and Interfaces

SHOGUN's core is implemented in C++ and is provided as a library, libshogun, that is readily usable by C++ application developers. Its common interface functions are encapsulated in libshogunui, such that only minimal code (like setting or getting a double matrix to/from the target language) is necessary. This allowed us to easily create interfaces to Matlab(tm), R, Octave and Python. (Note that both modular, object-oriented interfaces and static interfaces are provided: r, octave, matlab, python, python_modular, r_modular, octave_modular, cmdline, libshogun.)

Application

We have successfully applied SHOGUN to several problems from computational biology, such as Super Family classification, Splice Site Prediction, Interpreting the SVM Classifier, Splice Form Prediction, Alternative Splicing and Promoter Prediction. Some of them come with no less than 10 million training examples, others with 7 billion test examples.

Documentation

We use Doxygen for both user and developer documentation, which may be read online. More than 600 documented examples for the interfaces python_modular, octave_modular, r_modular, static python, static matlab and octave, static r, static command line and the C++ libshogun developer interface can be found in the documentation.

- Changes to previous version:

This release contains several enhancements, cleanups and bugfixes:

Features

- First release of the Efficient Dimensionality Reduction Toolkit (EDRT).

- Support for new SWIG -builtin python interface feature (SWIG 2.0.4 is required now).

- EDRT algorithms are now available using static interfaces such as matlab and octave.

- Jensen-Shannon kernel and Homogeneous kernel map preprocessor (thanks to Viktor Gal).

- New 'multiclass' module for multiclass classification algorithms, generic linear and kernel multiclass machines, multiclass LibLinear and OCAS wrappers, new rejection schemes concept by Sergey Lisitsyn.

- Various multitask learning algorithms including L1/Lq multitask group lasso logistic regression and least squares regression, L1/L2 multitask tree guided group lasso logistic regression and least squares regression, trace norm regularized multitask logistic regression, clustered multitask logistic regression and L1/L2 multitask group logistic regression by Sergey Lisitsyn.

- Group and tree-guided logistic regression for binary and multiclass problems by Sergey Lisitsyn.

- Mahalanobis distance, QDA, Stochastic Proximity Embedding, generic OvO multiclass machine and CoverTree & KNN integration (thanks to Fernando J. Iglesias Garcia).

- Structured output learning framework by Fernando J. Iglesias Garcia.

- Hidden Markov support vector machine structured output model by Fernando J. Iglesias Garcia.

- Implementations of three Bundle method for risk minimization (BMRM) variants by Michal Uricar.

- Latent SVM framework and latent detector example by Viktor Gal.

- Gaussian processes framework for parameter selection and Gaussian processes regression estimation framework by Jacob Walker.

- New graphical python modular examples.

- Standard Cross-Validation splitting for regression problems by Heiko Strathmann.

- New data-locking concept by Heiko Strathmann, which allows telling machines that data is not going to change during training/testing until unlocked. KernelMachines now make use of this by not recomputing the kernel matrix in cross-validation.

- Cross-validation for KernelMachines is now parallelized.

- Cross-validation is now possible with custom kernels.

- Features may now have arbitrarily many index subsets (of subsets (of subsets (...))).

- Various clustering measures, Least Angle Regression and new multiclass strategies concept (thanks to Chiyuan Zhang).

- A bunch of multiclass learning algorithms including the ShareBoost algorithm, ECOC framework, conditional probability tree, balanced conditional probability tree, random conditional probability tree and relaxed tree by Chiyuan Zhang.

- Python Sparse matrix typemap for octave modular interface (thanks to Evgeniy Andreev).

- Newton SVM port (thanks to Harshit Syal).

- Some progress on native windows compilation using cmake and mingw-w64 (thanks to Josh aka jklontz).

- CMake compilation improvements (thanks to Eric aka yoo).
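The nested index subsets listed above ("subsets of subsets") can be pictured as a stack of index lists, where each new subset is expressed relative to the view beneath it. The following Python sketch illustrates that idea only; `SubsetView`, `add_subset` and `remove_subset` are hypothetical names for this example and are not taken from SHOGUN's source.

```python
from typing import List, Sequence


class SubsetView:
    """Hypothetical view over data with a stack of nested index subsets."""

    def __init__(self, data: Sequence):
        self.data = data
        self.stack: List[List[int]] = []

    def __len__(self) -> int:
        return len(self.stack[-1]) if self.stack else len(self.data)

    def add_subset(self, indices: Sequence[int]) -> None:
        # Indices refer to positions in the *current* view, not the raw data.
        n = len(self)
        if any(i < 0 or i >= n for i in indices):
            raise IndexError("subset index outside the current view")
        self.stack.append(list(indices))

    def remove_subset(self) -> None:
        # Drop the innermost subset and fall back to the view beneath it.
        self.stack.pop()

    def __getitem__(self, i: int):
        # Translate through every level, innermost subset first.
        for subset in reversed(self.stack):
            i = subset[i]
        return self.data[i]

    def to_list(self) -> list:
        return [self[i] for i in range(len(self))]


view = SubsetView(["a", "b", "c", "d", "e", "f"])
view.add_subset([1, 3, 5])   # e.g. a cross-validation training fold
outer = view.to_list()
view.add_subset([2, 0])      # a subset of that fold
inner = view.to_list()
view.remove_subset()         # back to the fold
restored = view.to_list()

print(outer, inner, restored)
```

The underlying data is never copied, which is what makes this kind of layering convenient for nested cross-validation.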

Bugfixes

- Fix for bug in the Gaussian Naive Bayes classifier, its domain was changed to log-space.

- Fix for R_static interface installation (thanks Steve Lianoglou).

- SVMOcas memsetting and max_train_time bugfix.

- Various fixes for compile errors with clang.

- Stratified cross-validation now uses different indices for each run.

Cleanup and API Changes

- Various code cleanups by Evan Shelhamer.

- Parameter migration framework by Heiko Strathmann. From now on, changes in the shogun objects will no longer break loading old serialized files.

- Supported Operating Systems: Cygwin, Linux, Macosx, Bsd

- Data Formats: Plain Ascii, Svmlight, Binary, Fasta, Fastq, Hdf

- Tags: Bioinformatics, Large Scale, String Kernel, Kernel, Kernelmachine, Lda, Lpm, Matlab, Mkl, Octave, Python, R, Svm, Sgd, Icml2010, Liblinear, Libsvm, Multiple Kernel Learning, Ocas, Gaussian Processes, Reg

Comments

- Soeren Sonnenburg (on September 12, 2008, 16:14:36)

- In case you find bugs, feel free to report them at [http://trac.tuebingen.mpg.de/shogun](http://trac.tuebingen.mpg.de/shogun).

- Tom Fawcett (on January 3, 2011, 03:20:48)

- You say, "Some of them come with no less than 10 million training examples, others with 7 billion test examples." I'm not sure what this means. I have problems with mixed symbolic/numeric attributes and the training example sets don't fit in memory. Does SHOGUN require that training examples fit in memory?

- Soeren Sonnenburg (on January 14, 2011, 18:12:01)

- Shogun does not necessarily require examples to be in memory (if you use any of the FileFeatures). However, most algorithms within shogun are batch type - so using the non in-memory FileFeatures would probably be very slow. This does not matter for doing predictions of course, even though the 7 billion test examples above referred to predicting gene starts on the whole human genome (in memory ~3.5GB and a context window of 1200nt was shifted around in that string). In addition one can compute features (or feature space) on-the-fly potentially saving lots of memory. Not sure how big your problem is but I guess this is better discussed on the shogun mailinglist.

- Yuri Hoffmann (on September 14, 2013, 17:12:16)

- cannot use the java interface in cygwin (already reported on github) nor in debian.
